How To Run AI Models on Raspberry Pi Locally Without Internet

AI has become an integral part of the modern world. The craze is so big that the Raspberry Pi Foundation (a non-profit organization) has released an AI HAT+ add-on for its Pi boards. In a press release, the Raspberry Pi Foundation stated that with the new AI HAT+ board, you don’t need any extra hardware to run AI models on the Pi locally.

A Raspberry Pi can run an SLM (small language model) on its CPU. Token generation is comparatively slow next to dedicated hardware, but several small models with millions of parameters run pretty well. So without wasting time, let’s learn how to run an AI model on a Raspberry Pi.

How To Run AI Models on Raspberry Pi Without Internet

Before starting the project, you will need the following things.

What You Need:

Raspberry Pi board with 2GB of RAM

SD card with 8GB of storage

Let’s start.

Install the Ollama Framework

First, set up the Pi board and open the terminal. Run:

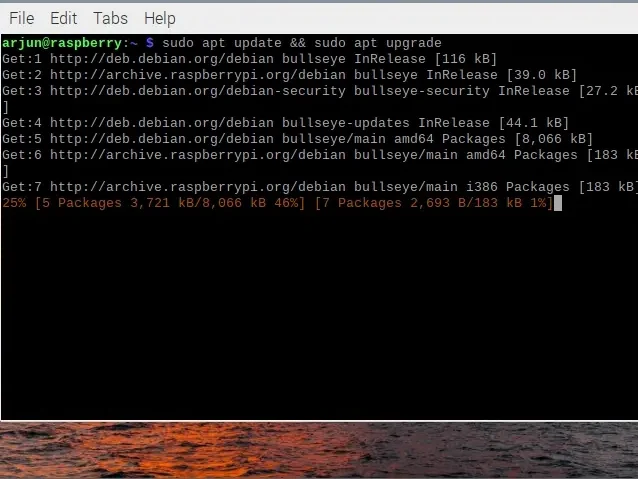

sudo apt update && sudo apt upgrade

This command refreshes the package lists and upgrades the installed packages on the Pi board.

Now run

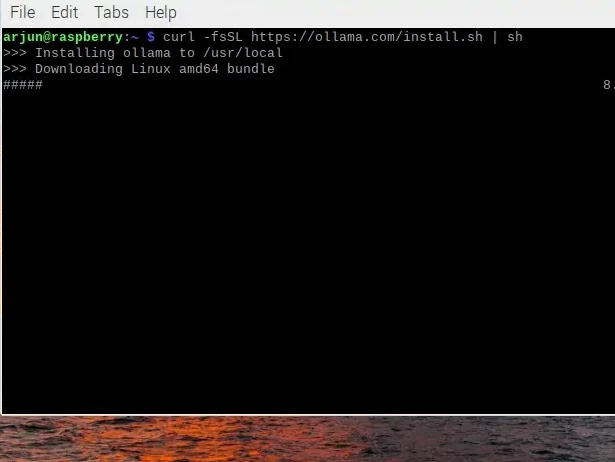

curl -fsSL https://ollama.com/install.sh | sh

This command installs the latest version of Ollama on your Raspberry Pi board. The installation takes a few seconds. Once it finishes, Ollama shows a warning that it will run AI models on the CPU, because the Pi has no supported GPU. At this point you still can’t use AI on the Pi, because no model has been downloaded yet.
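Before pulling a model, it can help to confirm that the install actually worked. The sketch below is a minimal example, assuming a Bash shell and that the Ollama server listens on its default local port, 11434. The function names `have_cmd`, `ollama_up`, and `check_ollama` are hypothetical helpers for illustration, not part of Ollama itself.

```shell
#!/usr/bin/env bash

# Hypothetical helper: succeed if the named command is on PATH.
have_cmd() {
  command -v "$1" >/dev/null 2>&1
}

# Hypothetical helper: succeed if something answers on Ollama's
# default address (assumed here to be http://localhost:11434).
ollama_up() {
  curl -fsS --max-time 2 http://localhost:11434/ >/dev/null 2>&1
}

# Hypothetical helper: check that the CLI exists, print its version,
# and check that the background server is reachable.
check_ollama() {
  if ! have_cmd ollama; then
    echo "ollama not found on PATH; re-run the install script" >&2
    return 1
  fi
  ollama --version
  if ollama_up; then
    echo "Ollama server is running"
  else
    echo "Ollama server is not responding on port 11434" >&2
    return 1
  fi
}
```

After sourcing the functions, running `check_ollama` should print the Ollama version and a confirmation line if everything is in place.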


Run AI Model on Raspberry Pi Locally

After installing Ollama, you need to download tinyllama, a 1.1-billion-parameter SLM, so that you can run it on your Pi locally.

- Run

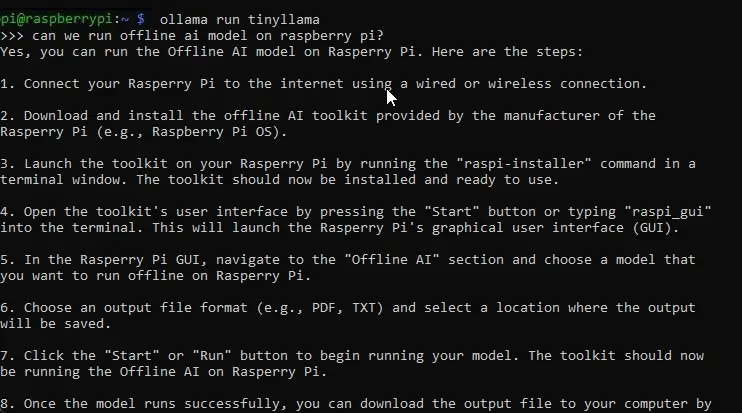

ollama run tinyllama

The first run downloads the model. Once the download is complete, you can enter your prompt and generate responses. In my case, tinyllama felt a bit slow; it takes time to generate a response.
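If you want to send prompts from a script instead of typing them at the interactive prompt, Ollama also serves a local HTTP API. The sketch below assumes the server is listening on its default address, http://localhost:11434, and uses the `/api/generate` endpoint with streaming turned off so the reply arrives as a single JSON object. `json_escape`, `build_generate_body`, and `ask_ollama` are hypothetical helper names, and the escaping is deliberately minimal: it handles backslashes and double quotes, not newlines or other control characters.

```shell
#!/usr/bin/env bash

# Hypothetical helper: escape backslashes and double quotes so the text
# can sit inside a JSON string. Newlines are NOT handled.
json_escape() {
  printf '%s' "$1" | sed -e 's/\\/\\\\/g' -e 's/"/\\"/g'
}

# Hypothetical helper: build the JSON body for /api/generate.
# "stream": false asks for one complete JSON reply instead of chunks.
build_generate_body() {
  local model="$1"
  local prompt
  prompt=$(json_escape "$2")
  printf '{"model":"%s","prompt":"%s","stream":false}' "$model" "$prompt"
}

# Hypothetical helper: send the prompt to the local Ollama server
# (assumed default port 11434) and print the raw JSON reply.
ask_ollama() {
  curl -s http://localhost:11434/api/generate -d "$(build_generate_body "$1" "$2")"
}
```

For example, `ask_ollama tinyllama "Why is the sky blue?"` prints a JSON object whose `response` field holds the answer; if `jq` is installed, piping the output to `jq -r .response` shows just the text. Once the model has been downloaded, this works without an internet connection.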

You can also try smollm, or, if you have a Pi 5 board, Microsoft’s Phi.

To install smollm on your Pi board, open the terminal and enter:

ollama run smollm:135m

Or, to install Microsoft’s Phi on your Pi 5 board, open the terminal and enter:

ollama run phi

Note: tinyllama takes up about 638 MB of RAM. If you have limited RAM, you can use smollm with 135 million parameters, which takes only about 92 MB. It is the best choice for a Raspberry Pi with little RAM. Phi is a 2.7-billion-parameter model, but it takes around 1.6 GB of RAM.
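Because the models only need the internet for the initial download, one way to prepare a Pi for offline use is to pick a model that fits its free memory and pull it ahead of time. The sketch below is a rough heuristic, not an official recommendation: the 2048 MB and 1024 MB thresholds are assumptions chosen to leave headroom above the sizes in the note, and it ignores the board model (the article suggests Phi only for the Pi 5). `available_mb`, `pick_model`, and `prefetch_model` are hypothetical helpers, and reading `MemAvailable` from /proc/meminfo means it only works on Linux.

```shell
#!/usr/bin/env bash

# Hypothetical helper: print available memory in MB, read from the
# MemAvailable line of /proc/meminfo (reported there in kB; Linux only).
available_mb() {
  awk '/^MemAvailable:/ { print int($2 / 1024) }' /proc/meminfo
}

# Hypothetical helper: suggest an Ollama model tag for a given amount
# of free RAM in MB. Thresholds are assumptions, not measured limits.
pick_model() {
  local mb="$1"
  if [ "$mb" -ge 2048 ]; then
    echo phi            # ~1.6 GB model
  elif [ "$mb" -ge 1024 ]; then
    echo tinyllama      # ~638 MB model
  else
    echo smollm:135m    # ~92 MB model
  fi
}

# Hypothetical helper: download the suggested model while online so it
# can be run later without an internet connection.
prefetch_model() {
  local model
  model=$(pick_model "$(available_mb)")
  echo "Pulling $model"
  ollama pull "$model"
}
```

Run `prefetch_model` while the Pi is still online. Later, without internet, `ollama list` shows the downloaded models, and `ollama run` with the same tag starts a chat.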

Conclusion

Lastly, I personally prefer Ollama because it is easy to install and use. With just three commands, you can run AI locally on your Pi board. You can also use llama.cpp, but its installation process is more complicated.
